Since Janet introduced her holistic testing model, we’ve had several practitioners tell us, “This describes what we do so well! Thank you for explaining this – I can use this model to explain to others!” This approach resonates with people on teams who have learned ways to deliver small chunks of value to their customers frequently, at a sustainable pace.

Software products are growing more complicated and more complex as they embrace technologies that help us understand and solve our customers’ problems. We want to do as much testing as we can when we build new capabilities. We also need to take advantage of newer technologies that let us learn about the problems our customers encounter and respond quickly with new solutions.

Here is our definition of what we labelled “agile testing”. We crafted it a few years ago with input from many in the testing community, and we still value it:

> Collaborative testing practices that occur continuously, from inception to delivery and beyond, supporting frequent delivery of value for our customers. Testing activities focus on building quality into the product, using fast feedback loops to validate our understanding. The practices strengthen and support the idea of whole team responsibility for quality.

We still believe in these ideas, and over the years we have continued to learn and to share what we’ve learned. Many teams practice agile, DevOps, or whatever you want to call it, without really thinking about testing. Many people start their first job having never experienced waterfall, only agile, so distinguishing between agile and waterfall has become less important.

“Holistic testing” is a more comprehensive term that encompasses all of these feedback loops. Today’s “whole team” can include UX designers, programmers, testers, and site reliability engineers. All members of a delivery team think about testing from the beginning of the cycle, including how we should instrument our code to reveal how it really behaves in production. Many teams watch dashboards and alerts, and dig into huge amounts of data to identify and quickly resolve issues. We’re not only concerned with the “average” customer experience; we want to ensure that all customers have a good experience.

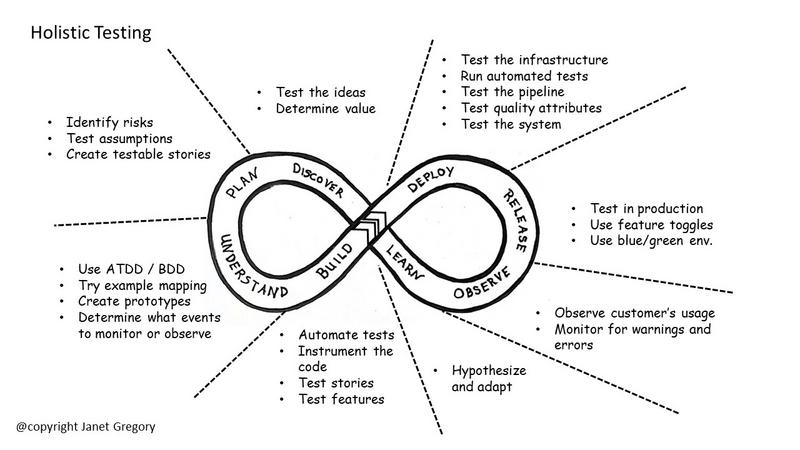

This holistic testing model is a way to think about testing throughout the development cycle, and was inspired by Dan Ashby’s “We test here” model.

The left side of the loop is about building quality into our products. The right side is testing to see whether we got it right, and adapting if we didn’t. The examples used in this diagram are just that: examples. We feel that this model balances testing early with testing after the code is built.

The conversation starts with what level of quality we need, and then what kinds of testing we need to support that level of quality.

We continue to adapt the model, but for now, it encompasses testing activities as we see them. We’d love to hear how you test differently, and how this model might help you visualize the types of testing you do in your product. Every product team has a slightly different context, so which types of testing you do, and how much of each, will be specific to your team.

With this model in mind, we are rebranding our course from "Agile Testing for the Whole Team" to "Holistic Testing: Strategies for agile teams". We hope the shift will help people think more about building quality in, and about how testing supports that effort.

Download our free mini ebook, Holistic Testing: Weave Quality into Your Product, to learn how your team can apply the model to build an effective testing strategy. (Just scroll down a bit on the home page and click the Download button; no personal information required!) We've received positive feedback from many organizations that have used a holistic testing approach to build quality into their software products.