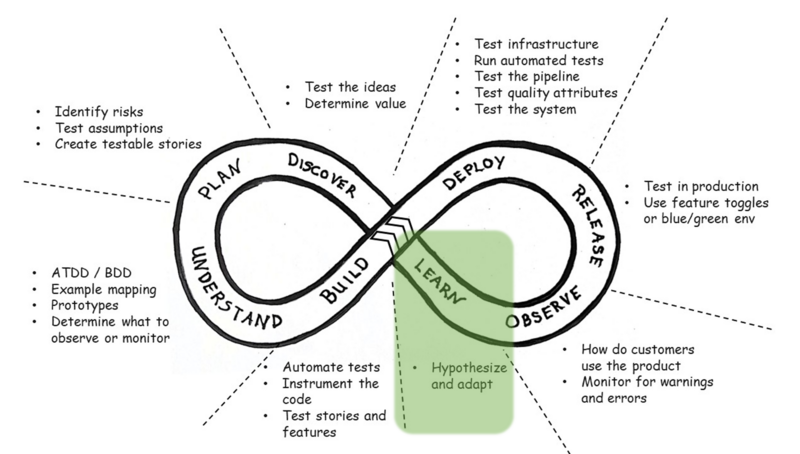

Agile teams continually learn and apply those learnings to adapt their product and process. There’s often a tendency for teams to release a new feature and then immediately move on to building the next one. When that happens, they miss a huge opportunity to learn how customers experience that feature in production, and whether it solves their problems.

As the holistic testing infinite loop cycles around the right side and back towards the left, we use the information about production usage to drive changes that will solve customer problems. A significant production outage might be followed up with a retrospective (some people call these postmortems, but we hope nobody died). We recommend that teams use visual collaboration tools as they explore issues like this. Root cause analysis tools such as Ishikawa diagrams (also called fishbone diagrams) may be helpful. What we learn can then feed into the discovery stage, where we come up with ideas that address the root problems.

We can learn much more from observing production usage than outages and system unavailability alone. For example, analytics can tell us how many customers tried out a new feature. Today’s tools can even show us where customers struggled with a user interface. We can analyze data for our service level indicators (SLIs) to see whether we met our service level objectives (SLOs) and service level agreements (SLAs). Use retrospectives and brainstorming meetings to understand these findings better and prioritize the most important challenges to address.

A technique that has worked well for Lisa’s team is using “small, frugal experiments”, something Lisa learned from Linda Rising’s talk at Agile 2015 (you can see the slides if you are an Agile Alliance member). Once your team has identified the challenge you want to address and dug into the associated issues to understand the problem better, design one or more small experiments to make progress on that challenge or make the problem smaller. These experiments should last no more than a few weeks, so that if they don’t work, you haven’t invested much time, and you’ve learned something from them in any case.

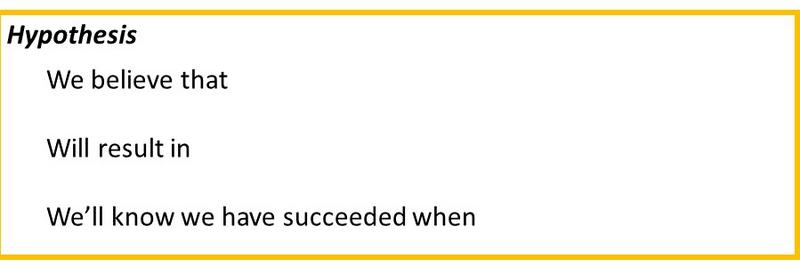

We like to use this format for a hypothesis:

We believe that \<an action that we are going to take>

Will result in \<some aspect of progress towards addressing a challenge or achieving a goal>

We’ll know we have succeeded when \<a concrete measurement that will show progress towards the goal>
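If your team tracks experiments in a script or a notebook, the three-part format maps naturally onto a small record type. The sketch below is only one way to do it. The `Hypothesis` class and its field names are hypothetical, and `succeeded` assumes a higher-is-better metric with a fixed time box in days. It is filled in with the availability example discussed in this section.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A small, frugal experiment in the three-part hypothesis format."""
    action: str           # "We believe that <action>"
    expected_result: str  # "Will result in <progress>"
    metric: str           # the concrete measurement we will watch
    target: float         # success threshold (assumes higher is better)
    time_box_days: int    # experiments last a few weeks at most

    def succeeded(self, measured: float, days_elapsed: int) -> bool:
        # Success means reaching the target inside the time box.
        return days_elapsed <= self.time_box_days and measured >= self.target

    def __str__(self) -> str:
        # Render the record back into the three-part wording.
        return (
            f"We believe that {self.action}\n"
            f"Will result in {self.expected_result}\n"
            f"We'll know we have succeeded when {self.metric} "
            f"reaches {self.target} within {self.time_box_days} days"
        )


h = Hypothesis(
    action="instrumenting our code to capture all events leading up to 500 errors",
    expected_result="faster diagnosis and fixing of 500 errors in production",
    metric="availability (%)",
    target=99.8,
    time_box_days=21,
)
print(h)
print(h.succeeded(measured=99.82, days_elapsed=18))  # True
```

Keeping the target and the time box as explicit fields makes it harder to declare victory on a vague feeling. Either the number moved within the agreed time, or the team has learned something and can design the next experiment.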

For example, let’s say our team has seen a high number of HTTP 500 (Internal Server Error) responses in production on our web-based application. These errors reduce availability: our system availability is 99.7% instead of our objective of 99.9%. We investigate further to confirm that the 500 errors were a significant cause of downtime. We might have a hypothesis such as:
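To make numbers like 99.7% and 99.9% concrete, here is a minimal sketch of one common way to define an availability SLI: the percentage of requests that did not fail with a server error. It assumes you can get a list of HTTP status codes for a time window; the function names are hypothetical, and in this sketch only 5xx responses count as failures (client errors such as 404 do not).

```python
def availability(status_codes: list[int]) -> float:
    """Request-based availability SLI: percentage of requests that did
    not fail with a 5xx server error."""
    if not status_codes:
        return 100.0  # assumption: no traffic counts as fully available
    failures = sum(1 for code in status_codes if 500 <= code <= 599)
    return 100.0 * (len(status_codes) - failures) / len(status_codes)


def meets_objective(measured: float, objective: float) -> bool:
    """Compare the measured SLI against the service level objective."""
    return measured >= objective


# 1,000 requests, 3 of which returned HTTP 500
codes = [200] * 997 + [500] * 3
sli = availability(codes)
print(f"{sli:.1f}%")                         # 99.7%
print(meets_objective(sli, objective=99.9))  # False
```

At this scale, the gap between 99.7% and 99.9% is the difference between three failed requests per thousand and one, which is why a relatively small number of 500 errors can put a team outside its objective.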

We believe that instrumenting our code to capture all events leading up to 500 errors

Will lead to faster diagnosis and fixing of 500 errors in production

We’ll know we have succeeded when our availability metric is up to 99.8% within three weeks

If our availability metric wasn’t increasing within three weeks, then we’d know there might be another cause of our downtime, or that we need to focus on preventing the 500 errors instead. We can then try more small experiments.

Another example: our analytics show that customers are slow to try out the new features we release, or don’t use them at all. Suppose we can’t get access to our customers to ask them why. As a team, including designers and the product owner, we decide to incorporate usability testing before releasing new features. Our hypothesis:

We believe that collaborating with designers to do usability testing of each new feature before release

Will lead to more customers trying out new features right away

We’ll know we have succeeded when 20% of customers try our next new feature within 48 hours of release
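Measuring this kind of success criterion usually comes down to a small calculation over analytics data. The sketch below assumes a hypothetical export that maps each customer ID to the first time they used the new feature, plus the total number of customers; `adoption_rate` and its parameters are illustrative names, not part of any particular analytics tool.

```python
from datetime import datetime, timedelta


def adoption_rate(release_time: datetime,
                  first_use: dict[str, datetime],
                  total_customers: int,
                  window: timedelta = timedelta(hours=48)) -> float:
    """Percentage of all customers whose first use of the feature
    happened within `window` of the release."""
    if total_customers <= 0:
        raise ValueError("total_customers must be positive")
    deadline = release_time + window
    adopters = sum(1 for used_at in first_use.values()
                   if release_time <= used_at <= deadline)
    return 100.0 * adopters / total_customers


release = datetime(2024, 5, 1, 9, 0)
# Hypothetical analytics export: customer ID -> first time they used the feature
first_use = {
    "c1": release + timedelta(hours=2),
    "c2": release + timedelta(hours=30),
    "c3": release + timedelta(hours=60),  # outside the 48-hour window
}
rate = adoption_rate(release, first_use, total_customers=10)
print(rate, rate >= 20.0)  # 20.0 True
```

Dividing by all customers, rather than only those who logged in, matches the wording "20% of customers"; if your team means active customers, that denominator is the one to change.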

There are many ways to learn from production usage and guide future product changes. The key is getting the whole team, including testers, programmers, designers, product owners and operations specialists, to collaborate on learning. Whatever method you choose to continually improve, it is important that you have some way to measure progress and know when you have succeeded.