Service-level language is a way for an organization to set targets for different aspects of product quality, especially areas like availability and performance. Both of us have worked in organizations that used SLAs (service-level agreements), but we’ve learned more detail from Abby Bangser, who says “Operations engineers improved how they measure quality as complexity and expectations have grown.”

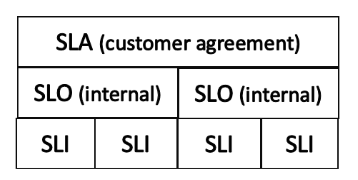

Three high-level definitions that are important to know are:

* Service-level indicator (SLI): ways to measure success and make informed decisions

* Service-level objective (SLO): targets and priorities for quality and performance

* Service-level agreement (SLA): customer agreement, along with associated penalties

Service-Level Indicator (SLI)

An SLI is a carefully defined quantitative measure of some aspect of the level of service that is provided.

* Feedback from production and test environments

* Monitor thresholds for each service’s availability; investigate anomalies

* Typical metrics include availability, latency, throughput, error rate, durability (long-term data retention). Examples:

- successful requests as a % of all requests

- ratio of home page requests that loaded in < 100 ms.

* Testers can ask questions and get examples to identify meaningful SLIs

Latency is the amount of time it takes to get through a pipeline, including any introduced delays.

Most services consider request latency—how long it takes to return a response to a request—as a key SLI.

Throughput is the number and size of items that can be sent at any one time through a pipeline and is typically measured in requests per second.

Other common SLIs include the error rate, often expressed as a fraction of all requests received. The measurements are often aggregated: i.e., raw data is collected over a measurement window and then turned into a rate, average, or percentile.
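To make the aggregation step concrete, here is a minimal sketch that turns one measurement window of raw request data into a rate, an average, and a percentile. The `Request` record, the `summarize` function, and the sample numbers are all assumptions made up for illustration; a real monitoring tool will have its own data model and its own percentile method (this one uses the simple nearest-rank rule).

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float  # how long it took to return a response
    ok: bool           # True if the request succeeded


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of values at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(window, window_seconds):
    """Aggregate the raw requests from one measurement window into SLI values."""
    latencies = [r.latency_ms for r in window]
    errors = sum(1 for r in window if not r.ok)
    return {
        "error_rate": errors / len(window),
        "mean_latency_ms": sum(latencies) / len(latencies),
        "p95_latency_ms": percentile(latencies, 95),
        "fraction_under_100ms": sum(1 for v in latencies if v < 100) / len(latencies),
        "throughput_rps": len(window) / window_seconds,
    }


# Hypothetical data: five requests observed during a 10-second window.
window = [
    Request(40, True),
    Request(60, True),
    Request(80, True),
    Request(120, False),
    Request(200, True),
]
summary = summarize(window, window_seconds=10)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Notice that the average latency here is 100 ms while the 95th percentile is 200 ms. Percentiles often tell you more about what the slowest customers experience than an average does.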

Another kind of SLI important to SREs is availability, or the fraction of the time that a service is usable. It is often defined in terms of the fraction of well-formed requests that succeed, sometimes called yield.
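A small sketch of the yield calculation may help. It assumes each request is recorded as a hypothetical `(well_formed, succeeded)` pair; how your system decides that a request is well formed is a separate conversation to have with the team.

```python
def availability(requests):
    """Yield: the fraction of well-formed requests that succeeded.

    `requests` is a list of (well_formed, succeeded) pairs (a hypothetical shape).
    Malformed requests are left out so that bad client input does not count
    against the service.
    """
    outcomes = [succeeded for well_formed, succeeded in requests if well_formed]
    if not outcomes:
        return None  # no valid traffic in the window, so availability is undefined
    return sum(outcomes) / len(outcomes)


# Hypothetical sample: four well-formed requests (three succeed) and one malformed request.
sample = [(True, True), (True, True), (True, False), (False, False), (True, True)]
print(availability(sample))
```

Testers can probe the edges of a definition like this one: what counts as well formed, and what should be reported when there is no traffic at all?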

Service-Level Objective (SLO)

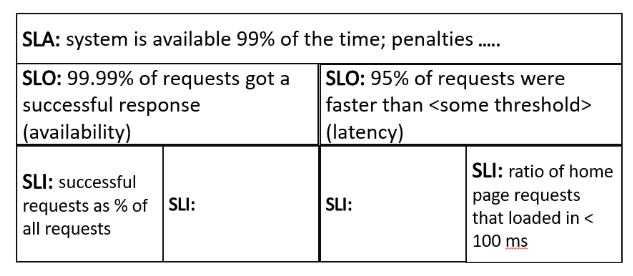

SLOs are the objectives your organization sets for itself and are a big part of perceived product quality. They define the acceptable level of service internally, and that internal level is usually stricter than what is promised externally.

* A target that sets expectations for how a service will perform

- 99.99% of requests got a successful response (availability)

- 95% of requests were faster than <some threshold> (latency)

* Uses SLIs to catch problems before customers feel pain

* Generates conversations about how services are performing and what to prioritize

* Testers can participate in setting SLOs; get involved with monitoring and alerting

Teams should consider how to build their product to meet these objectives, and how they can instrument their code so that they know the current levels.
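One way to picture knowing the current levels is a simple comparison of measured SLIs against their targets. The sketch below is only an illustration: the `Slo` class, the `evaluate` function, the SLO names, and the measured values are all invented, and the 95% latency target stands in for whatever threshold your team chooses.

```python
from dataclasses import dataclass


@dataclass
class Slo:
    name: str
    target: float  # minimum acceptable SLI value, as a fraction between 0 and 1


def evaluate(slos, measured):
    """Return {SLO name: True if the measured SLI meets or beats its target}."""
    return {slo.name: measured[slo.name] >= slo.target for slo in slos}


# Targets modeled on the examples above (hypothetical names).
slos = [
    Slo("availability", 0.9999),            # 99.99% of requests got a successful response
    Slo("latency_under_threshold", 0.95),   # 95% of requests were faster than the threshold
]

# Hypothetical SLI values measured over the current window.
measured = {"availability": 0.9997, "latency_under_threshold": 0.962}

results = evaluate(slos, measured)
for name, met in results.items():
    print(f"{name}: {'met' if met else 'MISSED'}")
```

In this made-up window the latency objective is met but the availability objective is missed, which is exactly the kind of result that should start a conversation about what to prioritize.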

Service-Level Agreement (SLA)

SLAs are what your organization agrees to with its customers. Not meeting these agreements may cost the business money and will likely cause pain for your customers. The customer agreement levels should be more easily attained than the SLOs, which are for internal use. They are tied to business and product decisions.

Examples:

* The system is available 99.9% of the time

* Financial penalties of $$$, if the agreement is not met (A few hours of downtime can be expensive)
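It can help to translate an availability percentage into minutes of downtime. The sketch below does that arithmetic for the 99.9% example and for a stricter internal target. The 30-day period and the 99.95% internal objective are assumptions chosen for illustration; a real agreement defines its own measurement period and exclusions.

```python
def allowed_downtime_minutes(availability_target, period_days=30):
    """Minutes of downtime that still meet the target over the period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_target)


sla = allowed_downtime_minutes(0.999)    # customer-facing agreement
slo = allowed_downtime_minutes(0.9995)   # hypothetical stricter internal objective

print(f"SLA 99.9%:  {sla:.1f} minutes of downtime per 30 days")
print(f"SLO 99.95%: {slo:.1f} minutes of downtime per 30 days")
```

Under these assumptions the agreement tolerates about 43 minutes of downtime in 30 days, while the internal objective tolerates about 22. That gap is the team's warning margin before customers and penalties are affected.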

SLAs are built on Service-Level Objectives and Service-Level Indicators

This next diagram shows some examples.

Customer experience

* SLIs reflect what customers experience - how they perceive the app is working

* Quantifying customer happiness metrics drives conversations across the organization

* Do SLIs drop but customers still are satisfied? Are you measuring the right things?

* Customer happiness may be measured by customer complaints, social media, or the number of completed transactions

A visible drop in the SLIs indicates problems that are affecting customers and causing them pain. If customers complain (“Pages are loading so slowly” or “We are seeing a lot of error pages”) and the SLIs don’t show any drop, there is something wrong with how the indicators are defined or how they are measured.

How does this relate to testing?

* Service level measurements reflect quality attributes

* Trends and anomalies guide future testing & coding

* Ask questions to drive service level conversations

As new features are planned, ask good questions to make sure the changes don’t adversely impact service levels, to understand the expected load on the system, to understand the risks, and to be prepared for unexpected problems. Testers are good question askers, and here are some questions they can ask to help the team:

* What risks can we mitigate with tests?

* What risks may not be known until we’re in production?

* How much data do we need to store and how do we make sure it is secure?

If your organization doesn’t have any of these measures, try to get something in place, even one measure. Start small and pick one aspect that’s easy to measure. Identify people that care. Then iterate and set up a feedback loop so you can improve.

Reference: https://sre.google/sre-book/service-level-objectives/